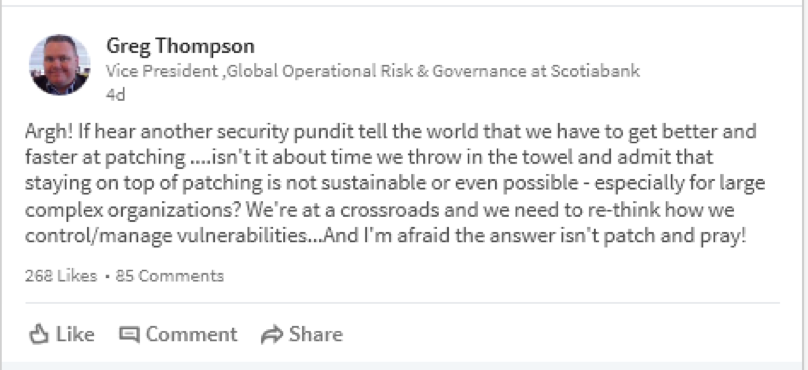

When WannaCry ransomware spread throughout the world last year by exploiting vulnerabilities for which there were patches, we security “pundits” stepped up the call to patch, as we always do. In a post on LinkedIn, Greg Thompson, Vice President of Global Operational Risk & Governance at Scotiabank, expressed his frustration with the status quo.

Greg isn’t wrong. Deploying patches in an enterprise department requires extensive testing prior to rollout. However, most of us can patch fairly quickly once a patch is released. And we should do it!

There is a much larger issue here, though. A vulnerability can be known to attackers but not to the general public, so there may be no patch to deploy. Managing and controlling vulnerabilities means preventing the successful exploitation of a vulnerability from doing serious harm. As another layer of defense, we also need to keep exploits from arriving at a victim’s machine. We need a layered approach in which patching is not a single point of failure.

A Layered Approach

First off, implementing a security awareness training program can help prevent successful phishing attacks from occurring in the first place. The 2017 Verizon Data Breach Investigations Report indicated that 66% of data breaches started with a malicious attachment in an email (i.e., phishing). Properly trained employees are far less likely to open attachments or click on links in phishing emails. I like to say that the most effective antimalware product is the one used by the best educated employees.

To help keep malware from reaching users in the first place, we use reputation systems. If almost everything coming from http://www.yyy.zzz is malicious, we can block the entire domain. If most of what comes from a single IP address in an otherwise legitimate domain is bad, then we can block that IP address. URLs can be blocked based upon a number of attributes, including the actual structure of the URL. Some malware will make it past any reputation system, and past users. This is where controlling and managing vulnerabilities comes into play.
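The domain and IP rules above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular product works: the `ReputationTable` class, the `should_block` helper, the 90% threshold, and the minimum sample count are all assumptions made for the example, and it does not attempt the URL-structure checks mentioned above.

```python
from urllib.parse import urlsplit


class ReputationTable:
    """Tracks how often traffic from a source (domain or IP) was malicious.

    The threshold and min_samples defaults are illustrative assumptions.
    """

    def __init__(self, threshold=0.9, min_samples=10):
        self.threshold = threshold      # malicious fraction that triggers a block
        self.min_samples = min_samples  # do not judge a source on too little data
        self._counts = {}               # source -> [malicious_count, total_count]

    def record(self, source, malicious):
        counts = self._counts.setdefault(source, [0, 0])
        if malicious:
            counts[0] += 1
        counts[1] += 1

    def is_blocked(self, source):
        malicious, total = self._counts.get(source, (0, 0))
        if total < self.min_samples:
            return False
        return malicious / total >= self.threshold


def should_block(url, ip, domains, ips):
    """Block a request if either its domain or its source IP has a bad reputation."""
    host = urlsplit(url).hostname or ""
    return domains.is_blocked(host) or ips.is_blocked(ip)
```

With this sketch, recording twenty malicious samples for `www.yyy.zzz` would cause any URL on that host to be blocked, while a single bad IP inside an otherwise clean domain can be blocked on its own without penalizing the whole domain.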

The vulnerability itself does no damage. The exploit does no damage. It is the payload that causes all of the harm. If we can contain the effects of the payload, then we are rethinking how we control and manage vulnerabilities. We no longer have to allow patches (still essential) to be a single point of failure.

Beyond detecting and blocking malicious files, it is important to stop execution of malware at runtime by monitoring what it’s trying to do. We also log each action the malware performs. When a piece of malware does get past runtime blocking, we can roll back all of the system changes it made. This is important. Simply removing malware can result in system instability. Precision rollback can be the difference between business continuity and costly downtime.
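To make the log-and-roll-back idea concrete, here is a minimal sketch in Python. It is not Webroot’s implementation. The `ChangeJournal` class is hypothetical, and a plain dictionary stands in for the files and registry entries a real product would have to track.

```python
class ChangeJournal:
    """Records every change made through it so the changes can be undone.

    `state` is a plain dict standing in for system state (files, registry
    keys, and so on); this is an assumption made to keep the sketch small.
    """

    _MISSING = object()  # marks "this key did not exist before the change"

    def __init__(self, state):
        self.state = state
        self.entries = []  # (key, previous_value), in the order changes happened

    def write(self, key, value):
        # Log the old value before overwriting it.
        self.entries.append((key, self.state.get(key, self._MISSING)))
        self.state[key] = value

    def delete(self, key):
        if key in self.state:
            self.entries.append((key, self.state[key]))
            del self.state[key]

    def rollback(self):
        # Undo changes newest-first so earlier values are restored last.
        while self.entries:
            key, previous = self.entries.pop()
            if previous is self._MISSING:
                self.state.pop(key, None)
            else:
                self.state[key] = previous
```

Because every change carries the value it replaced, rolling back restores modified and deleted items and removes anything the malware created, rather than just deleting the malicious file and leaving its side effects behind.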

Some malware will nevertheless make it onto a system and successfully execute. It’s at this point we observe what the payload is about to do. For example, malware that tries to steal usernames and passwords is identified by Webroot ID Shield. There are behaviors that virtually all keyloggers use, and Webroot ID Shield is able to intercept the request for credentials and return no data at all. Webroot needn’t have seen the file previously to be able to protect against it. Even when the user is tricked into entering their credentials, the trojan will not receive them.

There is one essential final step. You need to have offline data backups. The damage ransomware does is no different than the damage done by a hard drive crash. Typically, cloud storage is the easiest way to automate and maintain secure backups of your data.

Greg is right. We can no longer allow patches to be a single point of failure. But patching is still a critical part of your defensive strategy. New technology augments patching; it does not replace it and will not for the foreseeable future.

What do you think about patch and pray? Join our discussion in the Webroot Community or in the comments below!