Pro tips for backing up large datasets

Successfully recovering from disruption or disaster is one of an IT administrator’s most critical duties. Whether it’s restoring servers or rescuing lost data, failure to complete a successful recovery can spell doom for a company.

But mastering the recovery process happens before disaster strikes. This is especially true for large datasets. Our breakdown is here to help you along the way. We also have an even more detailed walkthrough for how to back up large datasets.

Large datasets have lots of variables to consider when figuring out the ‘how’ of recovery. After all, recovery doesn’t happen with the flip of a switch. Success is measured by retrieving mission critical files in the right order so your business can get back to business.

5 essential questions to ask before backing up large datasets

IT pros know that a successful recovery takes trial and error, and even a bit of finesse. And with many things in life, a bit of preparation can save a lot of downtime. So before you start, ask yourself these questions:

- What’s my company’s document retention policy? (And don’t forget regulatory requirements like GDPR)

First, you need to ensure you satisfy your company’s retention policy and that you’re in compliance with any regulatory requirements when choosing what to backup. Before sifting through your data and making hard decisions about what to protect, you need to take this important step to make sure you don’t run afoul of legislation or regulations.

- Which data is mission critical to the business?

Once in full compliance with company policies and regulations, it’s time to highlight any data that affects the operations or the financial health of the business. Identifying mission critical data allows you to prioritize backup tasks based on desired recovery options.

You can also exclude data that isn’t mission critical and isn’t covered by regulations from regular backup scheduling. Any bandwidth you save now will give you added flexibility when you make it to the last step.

- What types of data do I have (and can I compress it)?

Data is more than 1s and 0s. Some datasets have more redundancy than others, making them easier to compress while images, audio and video tend to have less redundancy. Your company might have a lot of incompressible images leading you to utilize snapshot or image backup. This allows you to move large datasets over a network more efficiently without interrupting critical workflows.

- How frequently do my data change?

The rate of change for your data will determine the size of your backups and help you figure out how long it will take to recover. That’s because once you have an initial backup and complete the dedupe process, backups only need to record the changes to your data.

Anything that doesn’t change will be recoverable from the initial backup. Even with a very large dataset, if most of your data stays static then you can recover from a small disruption very quickly. But no matter the rate of change, anticipating how long it will take to recover critical data informs your business continuity plans.

- What size backup will my network support?

Bandwidth capacity is a common denominator for successful recoveries. It’s important to remember that you can only protect as much data as your network will allow. Using all your bandwidth to make daily backups can grind business to a halt. This is where your preparation can help the most.

Once you’ve answered the first four questions, you should know which data need to be accessible at any hour of the day. You can protect this data onsite with a dedicated backup appliance to give you the fastest recovery times. Of course, you’ll still have this data backed up offsite in case a localized disaster strikes.

Money matters

IT assets cost money and often represent large investments for businesses. New technologies bring advancements in business continuity but can also add complications. And to top it all off, IT ecosystems increasingly must support both legacy technology and new systems.

Some vendors are slow to adapt new pricing models that fit with emerging technologies. They add on excessive overage charges and ‘per instance’ fees. This adds costs as businesses scale up their environments – more servers, databases and applications increasingly escalate prices.

Finding the right partner

That’s why it’s so important to work with a vendor that offers unlimited licensing. You’re empowered to protect what you need and grow your business without worrying about an extra cost. Most importantly, businesses shouldn’t have to skimp on protection because of an increase in price.

Time to get started

Protecting large datasets goes beyond just flipping a switch. Preparation and careful consideration of your data will help you land on a strategy that works for your business.

Interested in learning more about Carbonite backup plans?

Explore our industry leading solutions and start a free trial to see them in action.

2022: The threat landscape is paved with faster and more complex attacks with no signs of stopping

2020 may have been the year of establishing remote connectivity and addressing the cybersecurity skills gap, but 2021 presented security experts, government officials and businesses with a series of unprecedented challenges. The increased reliance on decentralized connection and the continued rapid expansion of digital transformation by enterprises, small to medium-sized businesses (SMBs) and individuals, provided cybercriminals with many opportunities to exploit and capitalize on unsuspecting businesses and individuals. With nothing short of a major financial windfall waiting in the midst, numerous organizations and individuals fell victim to the mischievous efforts of malicious actors.

Threats abound in 2021

In 2021, we witnessed so many competing shifts, many of which we detailed early on in our 2021 BrightCloud® Threat Report. In particular, we witnessed an increase in distributed denial of service (DDoS) attacks and a surge in the usage of the internet of things (IoT). For enterprises, SMBs and individuals that entrust IoT devices for work and entertainment, this opens up vulnerabilities to malicious vectors that take advantage of unprotected blind spots and wreak havoc.

The cybercrime marketplace also continued to get more robust while the barrier to entry for malicious actors continued to drop. This has created a perfect breeding ground for aspiring cybercriminals and organized cybercrime groups that support newcomers with venture capitalist-style funding.

Suffice to say, a lot has been happening at once.

Below, our security experts forecast where the main areas of concern lie in the year ahead.

Malware

Malware made leaps and bounds in 2021. In particular, six key threats made our list. These dark contenders include LemonDuck, REvil, Trickbot, Dridex, Conti and Cobalt Strike.

“In 2022, the widespread growth of mobile access will increase the prevalence of mobile malware, given all of the behavior tracking capabilities,” says Grayson Milbourne, security intelligence director, Carbonite + Webroot, OpenText companies. Malicious actors will continue to improve their social engineering tactics, making it more difficult to recognize deception and make it increasingly easier to become a victim, predicts Milbourne.

Ransomware

Earlier in 2021, we detailed the hidden costs of ransomware in our eBook. Many organizations when faced with an attack, gave into the demands of threat actors, paying hundreds of thousands of dollars on average. Since mid-October 2021, there have been more than 25 active strains of ransomware circulating. The evolution of ransomware as a service (RaaS) has vastly proliferated. Conti, in particular, continues to be the more prevalent ransomware affecting SMBs.

“As the year progresses, we will likely see faster times to network-wide deployment of ransomware after an initial compromise, even in as little as 24 hours,” says Milbourne.

“Stealth ransomware attacks, which would deploy all the necessary elements to control, exfiltrate and encrypt key assets of an organization but do not execute until there is no alternative, will likely continue to proliferate,” says Matt Aldridge, principal solutions consultant at Carbonite + Webroot. “This approach will be used to get around restrictions on reporting and on ransomware payments. Criminals can extort their targets based on the impending threat of ransomware without ever having to encrypt or exfiltrate the data. This could lead to quicker financial gains for criminals, as organizations will be more willing to pay to avoid generating awareness, experiencing major downtime or incurring data protection fines,” forecasts Aldridge.

Cryptocurrency

There was no shortage of discussion surrounding cryptocurrency and its security flaws. The rise of exchange attacks grew, and quick scams reigned. The free operation of cryptocurrency exchanges and marketplaces will be significantly impacted by government regulation and criminal investigation in 2022, especially in the United States.

“This year, we will likely see new threat actors become strategic in their cost-benefit analysis of undertaking long-term mining versus short-term ransomware payments. The focus will likely fall to Linux and the growth of manipulation of social media platforms to determine price,” predicts Kelvin Murray, senior threat researcher, Carbonite + Webroot.

Supply chain

“Simply put, attacks on the supply will never stop; it will only get worse,” says Tyler Moffitt, senior security analyst at Carbonite + Webroot. Each year the industry gets increasingly stronger and more intelligent. Yet every year, we witness more never-before-seen attacks and business leaders and security experts are constantly looking at each other thinking, “I’m glad it wasn’t us in that supply chain attack,” continues Moffitt.

General Data Protection Regulation (GDPR) fines have more than doubled since they came out a few years ago just as ransom amounts have increased. These fine values have also been promoted on leak sites. Moffitt predicts GDPR will continue to increase their fines, which may serve to help, instead of thwart, the threat of ransomware extortion.

Phishing

Last year, we forecasted phishing would continue to remain a prevailing method of attack, as unsuspecting individuals and businesses would fall victim to tailored assaults. In our mid-year BrightCloud® Threat report, we found a 440% increase in phishing, holding the record for the single largest phishing spike in one month alone. Industries like oil, gas, manufacturing and mining will continue to see growth in targeted attacks. Consumers also remain at risk. As more learning, shopping and personal banking is conducted online, consumers could face identity and financial theft.

What to expect in 2022?

The new year ushers in a new wave of imminent concerns. In 2022, we expect to see an increased use of deepfake technology to influence political opinion. We also expect business email compromise (BEC) attacks to become more common. To make matters worse, we also foresee another record-breaking year of vulnerability discovery which is further complicated by bidding wars between bug bounty programs, governments and organized cybercrime. Most bug bounties pay six figures or less, and for a government or a well-funded cybercrime organization, paying millions is not out of reach. Ultimately, this means more critical vulnerabilities will impact individuals and businesses. The early days of 2022 will also be compounded by the discovery of Log4j bugs hidden within Java code.

“The critical vulnerability identified within Log4Shell is a great example of how attackers can remotely inject malware into vulnerable systems. This active exploitation is happening as we speak,” says Milbourne.

The key to preparing for the plethora of attacks we will likely witness in 2022 is to establish cyber resilience.

Whether you’re looking to protect your family, business or customers, Carbonite + Webroot offer the solutions you need to establish a multi-layer approach to combating these threats. By adopting a cyber resilience posture, individuals, businesses small and large can mitigate risks in the ever-changing cyber threat landscape.

Experience our award-winning protection for yourself.

To learn more about Carbonite and begin your free trial, please click here.

To discover Webroot’s solutions for yourself, begin a free trial here.

Season’s cheatings: Online scams against the elderly to watch out for

Each year, as online shopping ramps up in the weeks before the holidays, so do online scams targeting the elderly. This season – in many ways unprecedented – is no different in this regard. In fact, COVID-19, Zoom meetings, vaccination recommendations and travel warnings all provide ample and unique precedent for social engineering attacks.

Not surprisingly, cybercriminals often target those least able to protect themselves. This could be those without antivirus protection, young internet users or, unfortunately, your elderly loved ones. The FBI reported nearly $1 billion in scams targeting the elderly in 2020, with the average victim losing nearly $10,000.

This holiday season, it may be worth talking to elderly relatives about the fact that they can be targeted online. Whether they’re seasoned, vigilant technology users or still learning the ropes of things like text messaging, chat forums, email and online shopping, it won’t hurt to build an understanding of some of the most common elder fraud scams on the internet.

The most common types of online elder fraud

According to the FBI, these are some of the most common online scams targeting the elderly. While a handful of common scams against older citizens are conducted in person, the majority are enabled or made more convincing by the use of technology.

- Romance scams: Criminals pose as interested romantic partners on social media or dating websites to capitalize on their elderly victims’ desire to find companions.

- Tech support scams: Criminals pose as technology support representatives and offer to fix non-existent computer issues. The scammers gain remote access to victims’ devices and sensitive information.

- Grandparent scams: Criminals pose as a relative—usually a child or grandchild—claiming to be in immediate financial need.

- Government impersonation scams: Criminals pose as government employees and threaten to arrest or prosecute victims unless they agree to provide funds or other payments.

- Sweepstakes/charity/lottery scams: Criminals claim to work for legitimate charitable organizations to gain victims’ trust. Or they claim their targets have won a foreign lottery or sweepstake, which they can collect for a “fee.”

All of the above are examples of “confidence scams,” or ruses in which a cybercriminal assumes a fake identity to win the trust of their would-be victims. Since they form the basis of phishing attacks, confidence scams are very familiar to those working in the cybersecurity industry.

While romance scams are a mainstay among fraud attempts against the elderly, more timely methods are popular today. AARP lists Zoom phishing emails and COVID-19 vaccination card scams as ones to watch out for now. Phony online shopping websites surge this time of year, and are becoming increasingly believable, according to the group.

Tips for preventing online elder scams

Given that the bulk of elder scams occur online, it’s no surprise that several of the FBI’s top tips for preventing them involve some measure of cyber awareness.

Here are the FBI’s top tips:

- Recognize scam attempts and end all communication with the perpetrator.

- Search online for the contact information (name, email, phone number, addresses) and the proposed offer. Other people have likely posted information online about individuals and businesses trying to run scams.

- Resist the pressure to act quickly. Scammers create a sense of urgency to produce fear and lure victims into immediate action. Call the police immediately if you feel there is a danger to yourself or a loved one.

- Never give or send any personally identifiable information, money, jewelry, gift cards, checks, or wire information to unverified people or businesses.

- Make sure all computer anti-virus and security software and malware protections are up to date. Use reputable anti-virus software and firewalls.

- Disconnect from the internet and shut down your device if you see a pop-up message or locked screen. Pop-ups are regularly used by perpetrators to spread malicious software. Enable pop-up blockers to avoid accidentally clicking on a pop-up.

- Be careful what you download. Never open an email attachment from someone you don’t know and be wary of email attachments forwarded to you.

- Take precautions to protect your identity if a criminal gains access to your device or account. Immediately contact your financial institutions to place protections on your accounts. Monitor your accounts and personal information for suspicious activity.

Pressure to act quickly is a hallmark of social engineering scams. It should set off alarm bells and it’s important to let older friends or family members know that. Using the internet as a tool to protect yourself, as recommended by the second bullet, is also a smart play. But more than anything, don’t overlook the importance of helping senior loved ones install an antivirus solution on their home computers. These can limit the damage of any successful scam in important ways.

Don’t wait until it’s too late. Protect the seniors in your life from online scams this holiday season. You might just save them significant money and hassle.

We have just the tool to do it, too. Discover our low-maintenance, no-hassle antivirus solutions here.

MSP to MSSP: Mature your security stack

Managed service providers (MSPs) deliver critical operational support for businesses around the world. As third-party providers of remote management, MSPs are typically contracted by small and medium-sized businesses (SMBs), government agencies and non-profit organizations to perform daily maintenance of information technology (IT) systems.

Similar to an MSP, managed security service providers (MSSPs) offer comparable organizations security management of their IT infrastructure, but are also enlisted to detect, prevent and respond to threats. An MSSP’s security expertise allows organizations that may not have the resources or talent to securely manage their systems and respond to an ever-evolving threat landscape.

Dark forces are increasing

The rise of ransomware, malware and other malicious vectors has transformed the threat landscape. According to our Hidden Costs of Ransomware report, 46% of businesses said their clients were impacted by an attack. A single cyber attack could trigger as much as $80 billion in economic losses across numerous SMBs, not to mention the ongoing supply chain attacks that stand to cripple an MSP’s business. With all this in mind, many MSPs have considered evolving into an MSSP provider, but at what cost?

Competitive advantage and financial gain

Some of the driving forces fueling MSPs towards this security-infused business model are revenue generation and market share. With the global managed security services market expected to balloon to over 65 billion USD within the next five years, becoming an MSSP has many tangible benefits. MSPs have the chance to extend their current offerings, fueling additional benefits for customers and potential growth to their customer base at the SMB and mid-enterprise level.

How to get there

To be considered an MSSP, an MSP needs to secure high availability security operations centers (SOCs) to enable 24/7/365 always-on security for their customers’ IT devices, systems and infrastructure. SOCs are comprised of highly skilled professionals. These professionals are trained to detect and mitigate threats that could negatively impact a customer’s data centers, servers or endpoints.

MSPs can take three approaches towards establishing MSSP offerings:

- Build. MSPs considering this route will need to evaluate the cost and time associated with establishing its MSSP operations from the ground up. This requires a lot of money, time and resources to hire and train security personnel. These trained individuals must be capable of constant monitoring and regular calibration to ensure their customer’s systems are protected.

“Only a handful of MSPs in the industry have been able to transition themselves into MSSPs. The lack of bandwidth and resources needed to address compliance issues keep many MSPs at bay. The transition is incredibly resource-intensive,” says George Anderson, product marketing director at Carbonite + Webroot, OpenText companies.

- Buy. Opting to purchase an existing MSSP provider can enable an MSP to leverage current customers, processes and talent to service its existing customer base with the added benefit of providing data and network security. Purchasing an existing provider also allows MSSPs to extend their security offerings to a newly acquired set of customers. However, with little regulation, MSPs must do their due diligence to ensure they are purchasing a well-equipped provider.

- Partner. One of the most efficient options for an MSP to pursue is partnering with an existing well-established MSSP. This allows an MSP to capitalize on the existing partner’s security expertise without having to develop the initial financial resources or technical expertise to support the creation and maintenance of its SOCs.

“MSPs contemplating the move to an MSSP business model should consider the value of a partnering strategy with a well-known security provider. By partnering with an existing MSSP, an MSP will be able to securely protect its customer IT infrastructure and provide timely responses after hours to ensure efficient detection and response,” says Shane Cooper, manager, channel sales at Carbonite + Webroot.

Transition to MSSP: risk or reward?

Transitioning from MSP to MSSP brings with it a series of quantifiable benefits. However, MSPs need to consider the size and scalability of service offerings they can provide, not to mention the costs associated with initially building their services or acquiring them from another provider. Partnering with a seasoned security provider allows MSPs to maintain their customer base while tapping into the resources and talent of a skilled and experienced provider.

“Many customers may be unaware of the quality of their SOC provider. MSPs transitioning into an MSSP may lack the proper resources and talent to respond to threats. It pays to optimize your investment with a security stack that brings the robust service and security elements together,” says Bill Steen, director, marketing at Carbonite + Webroot.

Webroot offers an MDR solution powered by Blackpoint Cyber, a leading expert in the industry. Webroot’s turnkey MDR solution has been developed by world-class security experts and is designed to enable 24/7/365 threat hunting, monitoring and remediation.

Optimize and mature your security stack with a provider you can trust. Secure your stack with Webroot.

To learn more about why partnering with Webroot can help your business and support your customers, please visit https://www.webroot.com/ca/en/business/partners/msp-partner-program

‘Tis the season for protecting your devices with Webroot antivirus

As the holiday season draws near, shoppers are eagerly searching for gifts online. Unfortunately, this time of year brings as much cybercrime as it does holiday cheer. Especially during the holidays, cybercriminals are eager to exploit and compromise your personal data. Even businesses large and small are not immune to the dark forces at work. Whether you purchase a new device or receive one as a gift, now is the time to consider the importance of protecting it with an antivirus program.

What is antivirus?

Antivirus is a software program that is specifically designed to search, prevent, detect and remove software viruses before they have a chance to wreak havoc on your devices. Antivirus programs accomplish this by conducting behavior-based detection, scans, virus quarantine and removal. Antivirus programs can also protect against other malicious software like trojans, worms, adware and more.

Do I really need antivirus?

In a word, yes. According to our 2021 Webroot BrightCloud Threat Report, on average, 18.8% of consumer PCs in Africa, Asia, the Middle East and South America were infected during 2020.

Antivirus software offers threat protection by securing all of your music files, photo galleries and important documents from being destroyed by malicious programs. Antivirus enables users to be forewarned about dangerous sites in advance. Antivirus programs also scan the Dark Web to determine if your information has been compromised. Comprehensive antivirus protection will also provide password protection for your online accounts through secure encryption.

Benefits of antivirus

By investing in antivirus protection, you’ll be able to maintain control of your online experience and best of all, your peace of mind.

Webroot offers three levels of antivirus protection. Our Basic Protection protects one device. You can rest easy knowing that your device, whether it’s a PC or Mac, will be protected. With lightning-fast scans, this line of defense offers always-on protection to safeguard your identity. Our real-time anti-phishing also blocks bad sites.

Looking to protect more than one device? We’ve got you covered. Our Internet Security Plus with AntiVirus offers all of the same great features as our basic protection but with the added bonus of safeguarding three devices. You’ll also have the ability to secure your smartphones, online passwords and enable custom-built protection if you own a Chromebook.

For the ultimate all-in-one defense, we offer Internet Security Complete with AntiVirus, which protects five devices. Enjoy all the same features as our Basic and Internet Security Plus with AntiVirus but take advantage of 25G of secure online storage and the ability to eliminate traces of online activity.

Keep the holidays merry and bright

Safeguard all of your new and old devices with Webroot. Bad actors will always be hard at work trying to steal your personal information. Protect yourself and your loved ones by investing in antivirus protection.

Webroot offers complete protection from viruses and identity theft without slowing you down while you browse or shop online.

Experience our award-winning security for yourself.

To learn more about how Webroot can protect you, please visit https://www.webroot.com/us/en

Making the case for MDR: An ally in an unfriendly landscape

Vulnerability reigns supreme

On Oct. 26, we co-hosted a live virtual event, Blackpoint ReCON, with partner Blackpoint Cyber. The event brought together industry experts and IT professionals to discuss how security professionals can continue to navigate the modern threat landscape through a pragmatic MDR approach. During the event, we learned how the increase in ransomware attacks underscores the value of a robust defense and recovery strategy.

A recent string of notable attacks including Microsoft Exchange, Kaseya, JBS USA, SolarWinds and the Colonial Pipeline, have clearly demonstrated that businesses and critical infrastructure are under assault. The spike in sophistication and speed of attacks has even caught the attention of the White House. It issued an Executive Order in May 2021, calling on the private sector to address the continuously shifting threat landscape.

For small to medium-sized businesses (SMBs) and managed service providers (MSPs), addressing these threats is made more difficult by resource-strapped teams at mid-sized organizations and budgetary constraints at small businesses.

Addressing ongoing SMB and MSP challenges

SMBs, unlike enterprise-level organizations, often suffer from a lack of adequate resources to effectively manage, detect and respond to ongoing security threats before they become full-blown attacks with dire consequences for continuity and productivity.

“Small businesses remain a prime target for threat actors. With minimal margins and few resources, one cyberattack could put a SMB out of business in a matter of days,” says Tyler Moffitt, senior security analyst at Carbonite + Webroot, OpenText companies.

For MSPs, their mid-market customers may not be at the scale or size of an enterprise to respond effectively to cyber threats. They may require additional resources to help boost defense infrastructure among customers. This leaves SMBs and MSP clients more vulnerable to attacks with the potential to cripple their business operations.

SMBs and MSPs don’t have to approach the evolving threat landscape alone. Managed detection and response (MDR) offers a reliable defense and response approach to cyber threats.

What is MDR?

Managed detection and response is a proactive managed cyber security approach to managing threats and malicious activity that empowers organizations to become more cyber resilient.

Carbonite + Webroot, OpenText companies, offers two new MDR options for customers looking for a threat detection and response system that meets their specific needs:

- Webroot MDR powered by Blackpoint is a turnkey solution developed by world-class security experts to provide 24/7/365 threat hunting, monitoring and remediation. Guided by a board of former national security leaders and an experienced MDR team, Webroot MDR constantly monitors, hunts and responds to threats.

- OpenText MDR is designed for SMBs with specific implementation and integration requirements determined by their business and IT environments. Backed by AI-powered threat detection, award-winning threat intelligence and a 99% detection rate, this MDR solution gives your business the ability to remain agile.

Having a MDR solution can:

- Reduce the impact of successful attacks

- Minimize business operations and continuity

- Boost the ability to become cyber resilient

- Achieve compliance with global regulations

- Bolster customer confidence

In our 2020 Webroot Threat Report, we found that phishing URLs increased by 640% last year. Similar attacks, business email comprise (BEC) for instance, are a major scam malicious actors use to lure unsuspecting end users. BEC attacks have cost organizations almost 1.8 billion in losses, according to FBI reports. MDR helps to reduce costs and secure an organization’s overall security program investment.

In today’s ever-evolving threat landscape, no business can go without a proactive security program. As threat actors become increasingly more complex, their impact to SMBs and MSP customers becomes more severe. To prepare, manage and recover from threats, SMBs and MSPs should consider joining forces with a trusted partner to help boost their customer’s overall protection and remain prepared to tackle whatever threats may impact business continuity.

To learn more about how Webroot can empower your business and get your own MDR conversation started, get in touch with us here.

Shining a light on the dark web

Discover how cybercriminals find their targets on the dark web:

For the average internet user, the dark web is something you only hear about in news broadcasts talking about the latest cyberattacks. But while you won’t find yourself in the dark web by accident, it’s important to know what it is and how you can protect yourself from it. Afterall, the dark web is where most cybercrimes get their start.

The dark web explained

In short, the dark web is a sort of online club where only the members know the ever-changing location.

Once a criminal learns the location, they anonymously gain access to sell stolen information and buy illicit items like illegally obtained credit cards.

Innovations in the dark web

The dark web isn’t just a marketplace, though. It’s also a gathering area where criminals can recruit each other to help with their next attack.

In fact, the rising rates of malware and computer viruses can partially be explained by cyber criminals coming together to pool their talent. They’ve created a new model for cybercrime where criminal specialists sell their talents to the highest bidder. Criminals might even loan out new technology with the promise that they get a portion of any stolen funds.

Protecting yourself and your family

The first step in protecting yourself from criminals in the dark web is to have a plan. The right cybersecurity tools will keep your important financial documents and your most precious memories safe from attack – or even accidental deletion.

And while cybercriminals are developing new methods and tools, cybersecurity professionals are innovating as well. Strategies for cyber resilience combine the best antivirus protection with state-of-the-art cloud backup services, so you’re protected while also prepared for the worst.

Ready to take the first step in protecting you and your family from the dark web?

Ransom hits main street

Cybercriminals have made headlines by forcing Fortune 500 companies to pay million-dollar ransom payments to retrieve their data and unlock their systems. But despite the headlines, most ransomware targets families as well as small and medium sized businesses.

In fact, the average ransom payment is closer to $50,000. And it makes sense – just like it is for common criminals, it’s easier to steal a purse than it is to rob millions from a bank.

Targeted by ransomware

Ransomware uses modern technology and cutting-edge tools to do something that feels decidedly old fashioned – steal from you. It’s a modern day grift, where criminals take something that you value and will only give it back in exchange for money

In the modern age, it looks like this: cybercriminals break into your device and lock away your most valuable files. They want to disrupt your life and your business so much that you’re willing to pay the cybercriminals to give back your most important files.

Ransomware tactics

“Their goal is disruption. How can your business operate if all the computers are locked up?” explains Grayson Milbourne, security intelligence director for Carbonite + Webroot. And businesses aren’t the only target.

Families might lose access to years of photos and videos because of a ransomware attack. That’s because criminals know that families are willing to pay to keep years’ worth of precious memories.

Of course, cybercriminals have added a new layer to their crimes. Now, instead of destroying your files if you don’t pay them, they’ll sell your files on the dark web. This way victims are even more likely to pay because they could lose passwords, business data and personal information.

How to fight back.

Cybercriminals aren’t the only ones using new technology, though. Cybersecurity experts are developing new tools for keeping cybercriminals out of your business and personal life. Of course, the first step to protecting you or your business is adopting a cybersecurity tool that protects your files and makes backups in case of emergency.

With safeguards in place, you won’t have to pick between losing your files and your privacy or paying cybercriminals.

Ready to take the first in protecting your most precious memories and most important documents?

3 reasons even Chromebook™ devices benefit from added security

Google Chromebook™ devices could rightly be called a game-changer for education. These low-cost laptops are within financial reach for far more families than their more expensive competitors, a fact that proved crucial with the outbreak of the COVID-19 pandemic at the beginning of last year.

During that period, Google donated more than 4,000 Chromebook devices to California schools and the sale of the devices surged, outselling Macs for the first time. They made remote learning possible for thousands of students who otherwise could have been quarantined without connections to the classroom. According to Google, 40 million students and educators were using Chromebook computers for learning as of last year.

Momentum is unlikely to slow anytime soon, especially since the Chrome operating system will now be the first many students are exposed to. The respected technology blog TechRadar has even referred to 2021 as “the year of the Chromebook.”

As a cybersecurity company, we naturally wonder what widespread use of Chromebook devices means for the online security of the general public. The good news is Chromebook security is pretty good compared to other devices and operating systems. Some interesting features like frequent sandboxing, automatic updates and “verified boot” go a long way to improve Chromebook security.

But the fact is, even Chromebook computers benefit from supplemental security. Here are a few of the reasons why.

- Users, especially new ones, make mistakes

There are several common user errors that put users, their personal information and their devices at risk. Many third-party security solutions are designed to account for exactly this type of behavior. Even strong security can’t prevent an account from being hacked if account credentials are stolen in a phishing attack, one of the most common causes of identity theft.

In 2020, phishing scams spiked by 510 percent between January and February alone. Scammers used the beginning of the pandemic to spoof sites like eBay, where in-demand goods were being bought and sold. In March, as lockdown went into full effect, attackers began targeting users of YouTube, HBO and Netflix at unprecedented rates.

In short, phishing scammers use current events to target vulnerable users, like those who are inexperienced, compulsive or still developing critical thinking skills – traits that apply to many school-aged children.

To combat phishing scams, it helps to have filters that can proactively alert users if there’s a high chance that a form field or website is likely to steal credentials. Security companies can do this by determining the likelihood a site isn’t what it seems based on its connection to other dishonest sites. This information, known as threat intelligence, can help proactively warn when a user may be headed for danger.

2. Fake apps are still cause for concern

There are plenty of examples of bad apps and sketchy Chrome extensions being downloaded from the Google Play Store. They vary in their seriousness from annoying, like constantly pushing ads to young users, to serious, like serving banking Trojans that target users’ personal financial information.

The Chromebook sandboxing feature will defend against many of these so-called “malicious apps” from invading devices through things like popular mobile games, but some will likely find ways to avoid the feature.

In the same way that threat intelligence can help proactively determine if a site is likely to be a vehicle for phishing attacks, it can also help determine if an app is likely to be malware disguised as an app based on how closely its related to other malware on the web.

3. Web-borne malware remains widespread

The internet is littered with unsafe websites that host viruses, malware, ransomware and other online threats. Some can slip spyware – malware that tracks a user’s online movements – onto devices without a user, especially an inexperienced internet user, noticing.

The Chromebook verified boot feature can help to disable these threats – if a user knows they’ve got one on their device. But many types of malware aren’t immediately obvious. They can operate in the background, perhaps collecting data on user’s habits or logging their keystrokes to try to steal passwords or other sensitive information.

Here again, warning users of threats in advance can make the difference between addressing an infection and avoiding one altogether. By providing advanced warning of a risky website or a suspect browser extension, a good antivirus solution can stop an infection before it happens. Think of it like maintaining a healthy immune system through diet and exercise to keep from coming down from the common cold.

Protecting vulnerable users from internet threats

It’s hard to be too cautious on the web, especially with users who are just starting to use it to study, learn and explore. There are security gaps in any operating system, so it helps to layer defenses against multiple types of threat.

When facing dangers like identity theft and spyware disguised as an addicting mobile game, it helps to have a little insider information on the “bad neighborhoods” of the internet.

Even with great device security, that’s the helpful information Chromebook users miss out on without installing a strong third-party antivirus solution.

Resilience lies with security: Securing remote access for your business

Remote access has helped us become more interconnected than ever before. In the United States alone, two months into the pandemic, approximately 35% of the workforce was teleworking. The growth of remote access allowed individuals to work with organizations and teams they don’t physically see or meet.

However, the demand for remote access has critical implications for security. Businesses now more than ever are expected to strike a balance between providing reliable remote access and properly securing it. Striking this balance also gives businesses the opportunity to retain customer loyalty and maintain a positive brand reputation. According to one study by Accenture, over 60% of consumers switched some or all of their business from one brand to another within the span of a year. Needless to say, securing remote access has major implications for business productivity and customer retention.

What is secure remote access?

Simply put, secure remote access is the ability to provide reliable entry into a user’s computer from a remote location outside of their work-related office. The user can access their company’s files and documents as if they were physically present at their office. Securing remote access can take different forms. The most popular options include virtual private network (VPN) or remote desktop protocol (RDP).

VPN works by initiating a secure connection over the internet through data encryption. Many businesses offer workers the opportunity to use this method by providing organizational connectivity through a VPN gateway to access the company’s internal network. One downside of using a VPN connection involves vulnerability. Any remote device that gains access to the VPN can share malware, for example, onto the internal company network.

RDP, on the other hand, functions by initiating a remote desktop connection option. Through the click of a mouse, a user can access their computer from any location by logging in with a username and password. However, activating this default feature opens the door to vulnerabilities. Through brute force, illegitimate actors can attempt to hack a user’s password by trying an infinite number of combinations. Without a lockout feature, cybercriminals can make repeated attempts. “This is where length of strength comes into play. It is important to have as many characters as possible within your password, so it’s harder for cybercriminals to crack,” says Tyler Moffitt, security analyst, Carbonite + Webroot, OpenText companies.

Overcoming obstacles

While the steps for securing remote access are simple, the learning curve for adoption may not be. Users, depending on their experience, may feel reluctant to learn another process. However, education is critical to maintaining a business’ security posture, especially when it comes to ransomware.

“The most common way we see ransomware affecting organizations – government municipalities, healthcare and education institutions – is through a breach. Once a cybercriminal is remoted onto a computer, it’s game over as far as security is concerned,” added Moffitt.

Benefits

The primary benefit of securing remote access is the ability to connect, work and engage from anywhere. A secure connection offers users the chance to work in locations previously not possible.

“The workplace will never be the same post-COVID. As more clients continue to maintain flexible working arrangements, it becomes even more important to secure clients remotely,” says Emma Furtado, customer advocacy manager, Carbonite + Webroot.

Adopting secure remote access also supports the maintenance of client satisfaction, overcoming reluctance and building brand advocacy.

“Carbonite + Webroot Luminaries, a group of managed service providers (MSPs), rely on their clients’ cyber resilience – and trust – to grow their businesses. After implementing Webroot products, many of their clients are open to multiple forms of secure remote access, such as VPN,” Furtado added.

Advice for organizational adoption

- Test, test, test. Like many applications, ongoing maintenance is key. Conducting frequent connection and penetration testing is important to ensure constant viability for users.

- Two-factor authentication. Whether it’s via email or text message, this additional security layer should be embedded within an organization’s remote access protocols.

- Document your procedures. Develop a standardized policy across your organization to ensure users understand the expectations surrounding remote access. This helps to build security awareness among users, which lessens the likelihood they will adopt shadow IT.

Embracing remote work with reliability and safety in mind

Securing remote access allows businesses to save money, reduce pressure on internal teams and protect intellectual property. As part of a robust cyber resilience strategy, businesses should prioritize developing the necessary backup, training, protection and restoration elements that will help maintain business continuity and enhance customer loyalty and trust.

To start your free Webroot® Security Awareness Training, please click here.

To learn more Webroot® Business Endpoint Protection, please click here.

NIST and No-notice: Finding the Goldilocks zone for phishing simulation difficulty

Earlier this year, the National Institute for Standards and Technology (NIST) published updated recommendations for phishing simulations in security awareness training programs. We discussed it on our Community page soon after the updated standards were released, but the substance of the change bears repeating.

“Practical exercises include no-notice social engineering attempts to collect information, gain unauthorized access, or simulate the adverse impact of opening malicious email attachments or invoking, via spear-phishing attacks, malicious web links.” – NIST SP 800-53, Rev. 5, Section 5.3 (pg. 60)

This update includes a recommendation for “no-notice” phishing simulations to be delivered at the beginning of security awareness training programs to more accurately gauge the readiness of a set of users to recognize a phishing attempt.

The thinking obviously being that letting users in on the phishing simulation game will heighten suspicion of their inbox and skew baseline results. This concern can be thought as a spin-off of the well-studied “Observer Effect” known in many scientific fields; observing the behavior of something necessarily changes that behavior.

While it might be tempting for a Chief Information Security Officer (CISO) or other IT professional to take high grades on a phishing simulation a sign of a job well done, that can be a dangerous conclusion to draw. Phishing tests that are too easy do little to address a problem that’s become one of the most common methods of entry for ransomware attacks.1 If IT professionals grade on a curve here, they’re doing very little to improve their organization’s overall cyber resilience.

Combatting this false sense of confidence about users’ ability to spot phishing attacks requires making sure simulations aren’t too easy to spot.

What makes a phishing simulation too easy?

After putting some thought into that question, NIST researchers published a paper last year in the Journal of Cybersecurity citing three key criteria for determining if a phishing simulation makes for good training.

According to the authors, “low click rates do not necessarily indicate training effectiveness and may instead mean the phishing emails” were:

- Too obvious – Either errors were too overt or these templates were running something akin to the Nigerian Prince scam. Either way, they won’t help an employee overcome today’s more sophisticated phishing attempts

- Not relevant to staff – We’re all busy at work. So deleting an email offering 25% off at Ed’s Golf Cart Repair Shop doesn’t mean a user is an expert at spotting scams. It just means there was nothing in the simulation that enticed anyone to click.

- The phish was repeated or similar to one that was – Phish me once, shame on me…but seriously, this drives home the importance of having a wide range of phishing templates. These programs work best when they’re ongoing, so it’s important to switch it up.

On the other hand, a phishing simulation is convincing if it does the following to some degree:

- Mimics a workplace process or practice

- Has workplace relevance

- Aligns with other situations or events, including those external to the workplace

- Presents consequences for NOT clicking (e.g., buy gift cards or we lose the client)

- References targeted training, specific warnings or other exposure

Tip: NIST has devised a weighted version of this scale, “the phish scale,” you can use to determine the difficulty of your simulations. A phishing simulation that has all of the above characteristics would be considered extremely difficult. That’s good, right?

Too much difficulty can be dangerous, too

Any security awareness training program that’s too difficult is liable to leave learners feeling put off, resigned to failure, or worse, coming away without any practical security learnings. This is especially true if users are punished too harshly for failing to spot a difficult phishing simulation.

Any program that’s both difficult and relying on a stick rather than a carrot for motivation runs the risk of:

- Reinforcing negative stereotypes of security training programs

- Encouraging employees to “game” the system by sharing information about tests

- Fostering animosity towards the organization’s overall security posture

- Inviting legal trouble from dissatisfied employees

For security awareness training to be successful, it has to be collaborative. Learners should feel like they’re part of something constructive, rather than just subjected to another type of performance review.

Hitting the sweet spot

Finding the appropriate difficulty level for phishing simulations is one of the reasons the initial, no-notice NIST recommendation is so important. It helps administrators establish baseline results that most accurately reflect users’ real understanding of phishing attacks. But we don’t recommend a training program be hidden from employees forever.

Instead, after initial results have been established, it’s better to announce the program publicly along with its goals, evaluation criteria and a point of contact for those interested in learning more. Once users are in the know, subsequent phishing simulations can focus on incremental improvements over the baseline results. As scores rise across the board, the difficulty can be gradually increased over time.

One essential recommendation: Always report publicly on positive results. Let users know they’re managing to catch more and more difficult simulations. Be as specific as possible, as in, “click-through rates dropped from A to B in this exercise.” This will help establish a sense of shared responsibility for organizational security and “gamify” the experience.

Calibrating your security awareness training is an ongoing experience. Don’t be afraid to adjust your simulations based on results. Happy learning.

Ready to establish your own successful security awareness training? Try us out free for 30 days.

1. Hiscox. “Cyber Readiness Report 2021.” (April 2021)

Survey: How well do IT pros know AI and machine learning?

What do the terms artificial intelligence and machine learning mean to you? If what comes to mind initially involves robot butlers or rogue computer programs, you’re not alone. Even IT pros at large enterprise organizations can’t escape pop culture visions fed by films and TV.

But today, as cyberattacks against businesses and individuals continue to proliferate, technologies like AI and ML that can drastically improve threat detection, protection and prevention are critical. This is even more true as workforces continue to operate remotely in such numbers.

That’s why, for a few years now, we’ve been conducting surveys of IT professionals to determine their familiarity with, and attitudes toward, artificial intelligence (AI) and machine learning (ML). For the purposes of this report, we surveyed IT decision-makers at enterprises (1000+ employees), small and medium-sized businesses (<250 employees), and consumers (home users) throughout the U.S., U.K., Japan, and Australia/New Zealand.

As a result, we learn about:

- Baseline cyber hygiene, including what cybersecurity tools are in use and how they’re used

- General experience with data breaches and attitudes toward the safety of their data

- How many organizations use cybersecurity tools with AI components

- Whether IT admins feel that AI actively contributes to the safety of their organizations or is marketing fluff

We titled this year’s survey Fact or Fiction: Perceptions and Misconceptions of AI and Machine Learning and expanded it to include professionals in the enterprise, mid-market organizations and private individuals. It’s one of the largest and most thorough reports on the topic we’ve put together to date and is packed with interesting findings.

Historically, we’ve seen significant confusion surrounding AI and ML. IT professionals are generally aware that they’re in-use, but struggle to voice how they’re helpful or what it is exactly that they do. In Australia, for instance, while the bulk of IT decision makers employ AI/ML-enabled solutions, barely over half (51%) are comfortable describing what they do.

Nevertheless, adoption of AI/ML-enabled technologies continues to rise. Today, more than 93% of enterprise-level businesses report using them. Overall, slightly less than half (47%) call increasing adoption of AI/ML their number one priority for addressing cybersecurity concerns in the coming year.

Here are a few other key takeaways regarding enterprise attitudes toward AI/ML:

- Understanding is growing – But more education is still required, so vendors must focus on benefits of AI/ML in terms of the bottom line and an enhanced security posture.

- AI/ML are key to repelling modern threats – Especially for remote workforces, advanced technologies are emerging as a key component for ensuring uptime and availability for clients.

- AI/ML can differentiate a business – Buyers are looking to invest in their tech stacks to stay out of the headlines for suffering a breach. As understanding of AI/ML grows, more are looking for these capabilities in their cyber defenses.

For the mid-market and individuals, another theme has persisted through our studies: overconfidence.

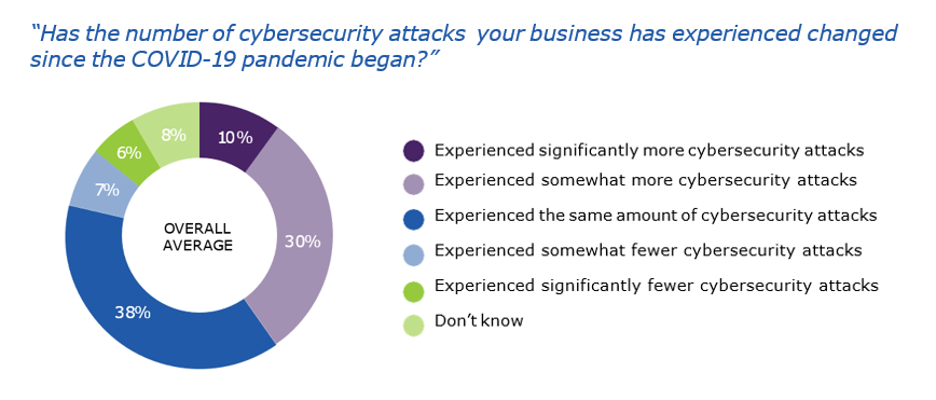

Among IT professionals at businesses with fewer than 250 employees, almost three-quarters (74%) of respondents believe their organizations are safe from most cyberattacks. But 48% have also admitted to falling victim to a data breach at least once. Interestingly, despite their confidence in their cybersecurity, the same respondents also believe their security situation has been worse by COVID-19.

Other notable findings among small and mid-sized businesses include:

- They’re beginning to recognize they’re targets – SMBs are catching onto the fact that cybercriminals pick off weak targets and realizing this fact’s implications for their supply chains.

- Limited IT budgets must be spent wisely – Without the resources to hire full-time IT staff, it becomes critical that a security stack defends against all the most common forms of attack (and their consequences).

- User education is key – If a business can’t spring for top-of-the-line cybersecurity solutions, educating users on how to keep from enabling breaches can go a long way towards building a strong defense with relatively little investment.

Consumers continue to report abysmal habits in their personal online lives. Less than half use an antivirus or other security tool. Only 16% report using a VPN when connecting in public spaces and 48% have had data stolen at least once. On the brighter side, constant headlines concerning corporations leaking consumer data have made consumers wary about who they give their data to and how much. This healthy skepticism is a good sign as the next large data breach is likely just around the corner.

Some valuable learning from the consumer sector, and how it bleeds over into the corporate sector, include:

- Business breaches affect consumers’ data – And they know it. Consumers are wary of providing too much sensitive data to companies after being barraged by news of high-profile hacks and data breaches.

- Consumers ARE NOT taking proper precautions – Fewer than half of home users have antivirus, backup or other cybersecurity measures in place. In all, 11% take no precautions online. This finding is especially relevant if remote workers are using personal devices for business.

- Unsurprisingly, AI/ML knowledge is lacking – When paid IT professionals don’t understand the technology, it may not be practical to expect the average consumer to be. But consumers should do their research on the tech powering their protection before committing to a VPN, antivirus or backup solution.

For the report’s complete findings, including a breakdown of cybersecurity spending by business size, download the full report.